XG-CBS

Explanation-Guided Conflict-Based Search (XG-CBS) extends Conflict-Based Search (CBS) for Multi-Agent Path Finding (MAPF) to account for interpretability, producing plans that are easier for a human to validate.

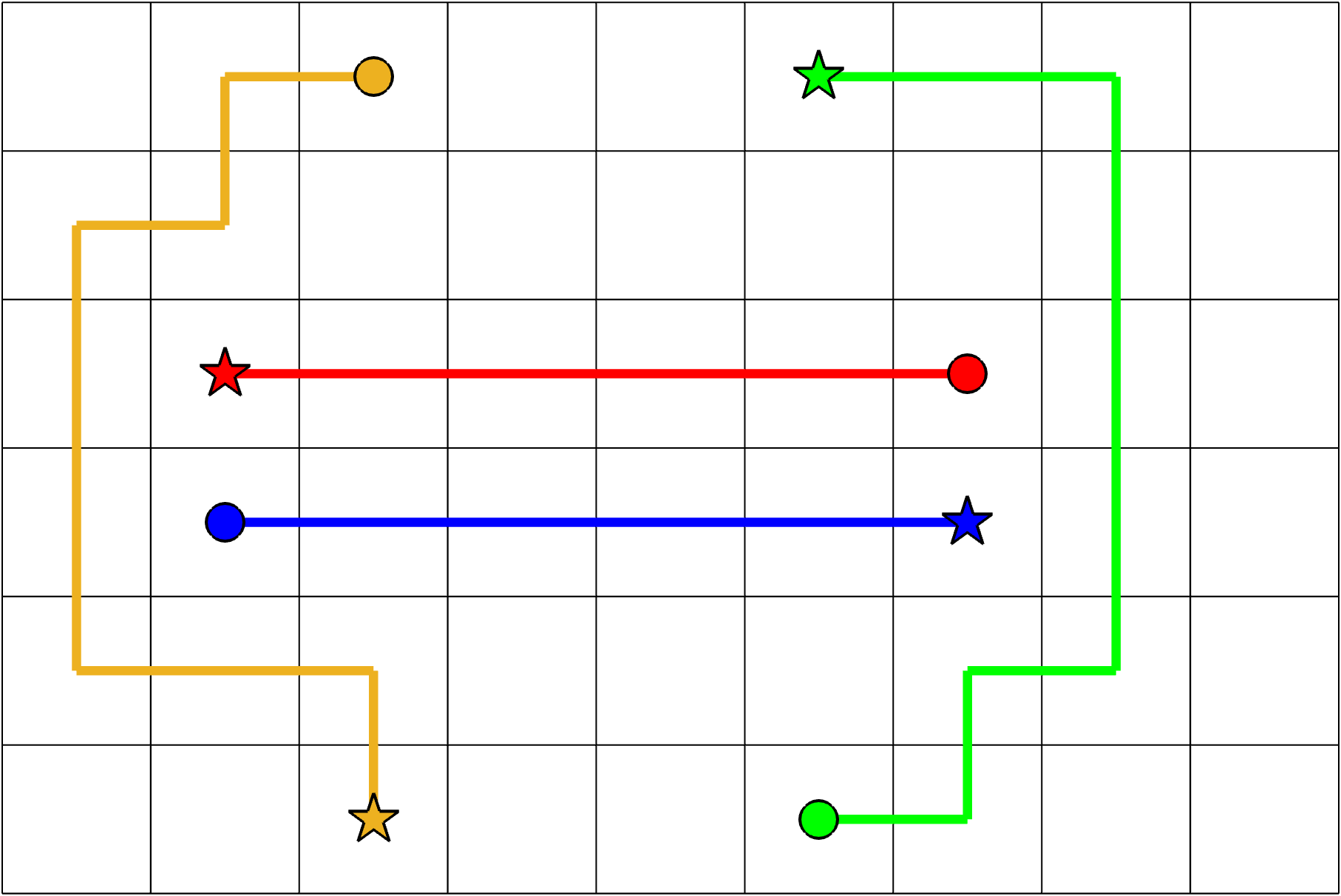

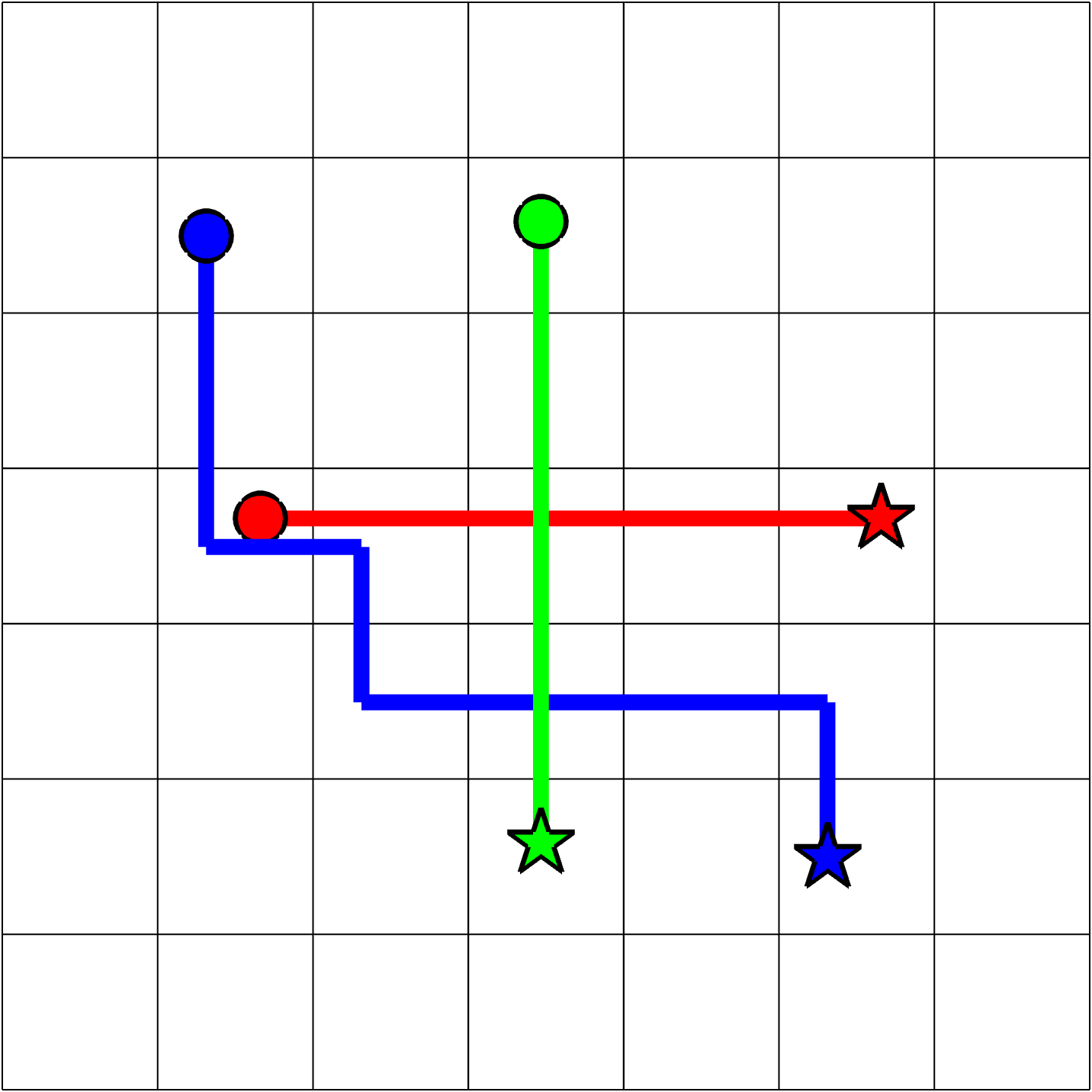

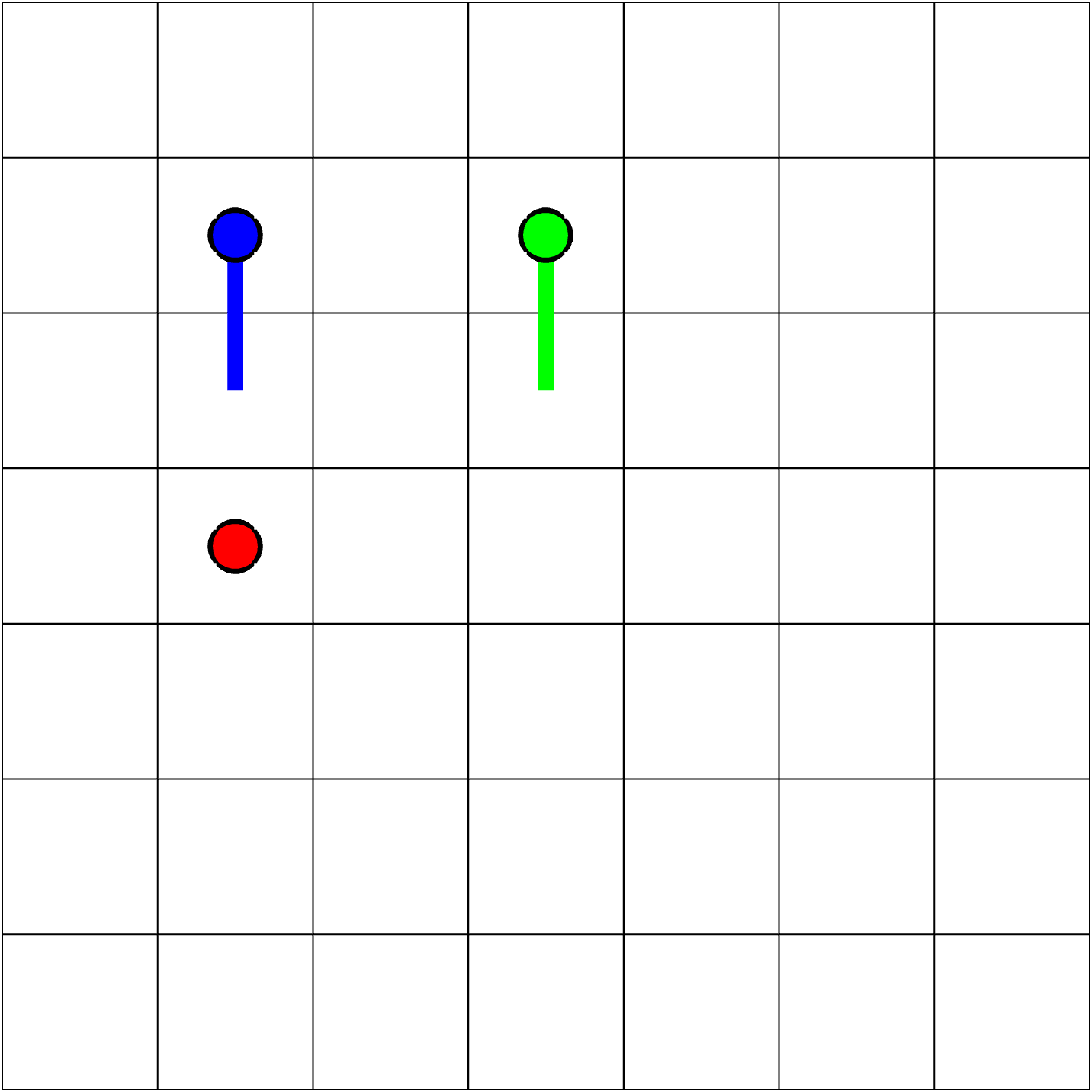

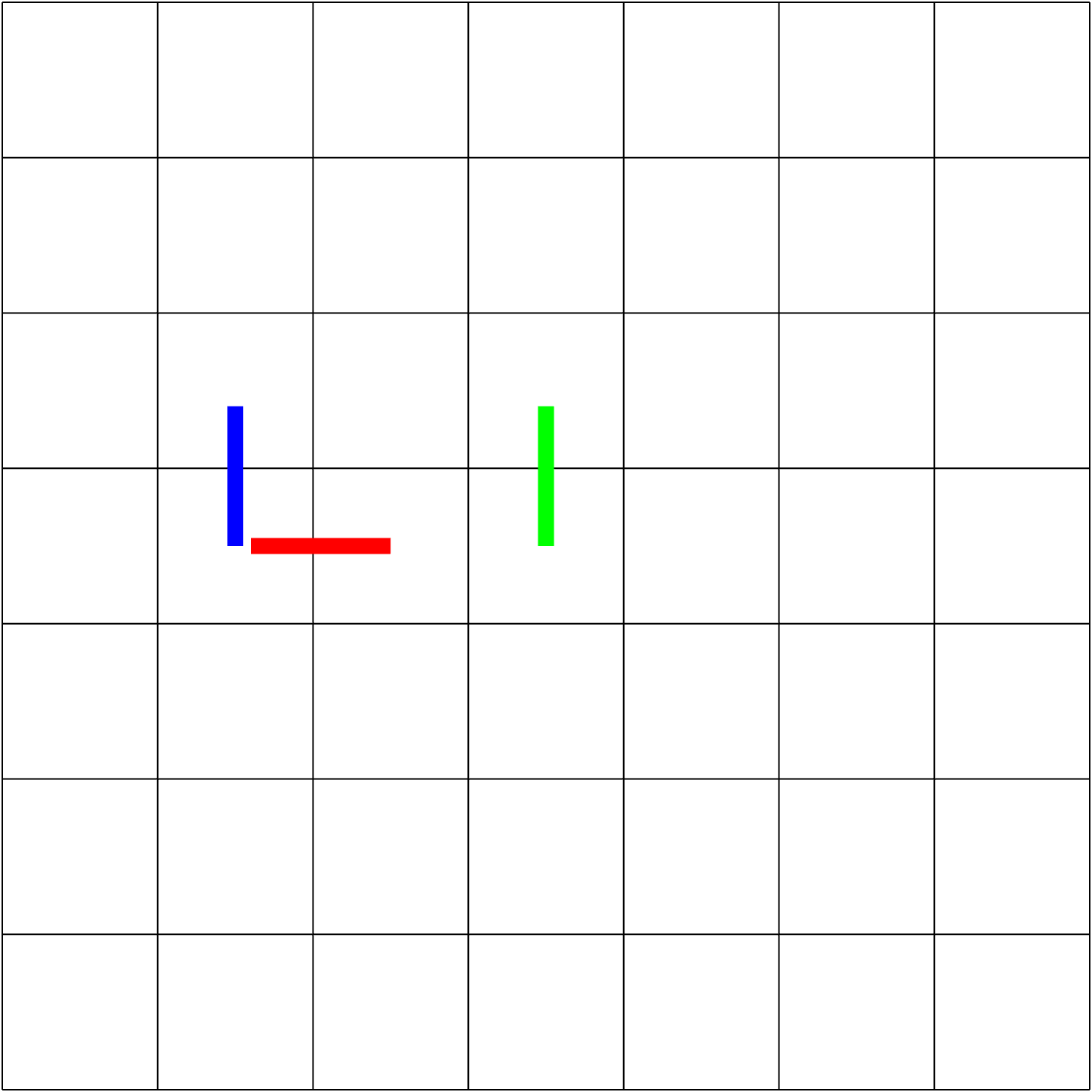

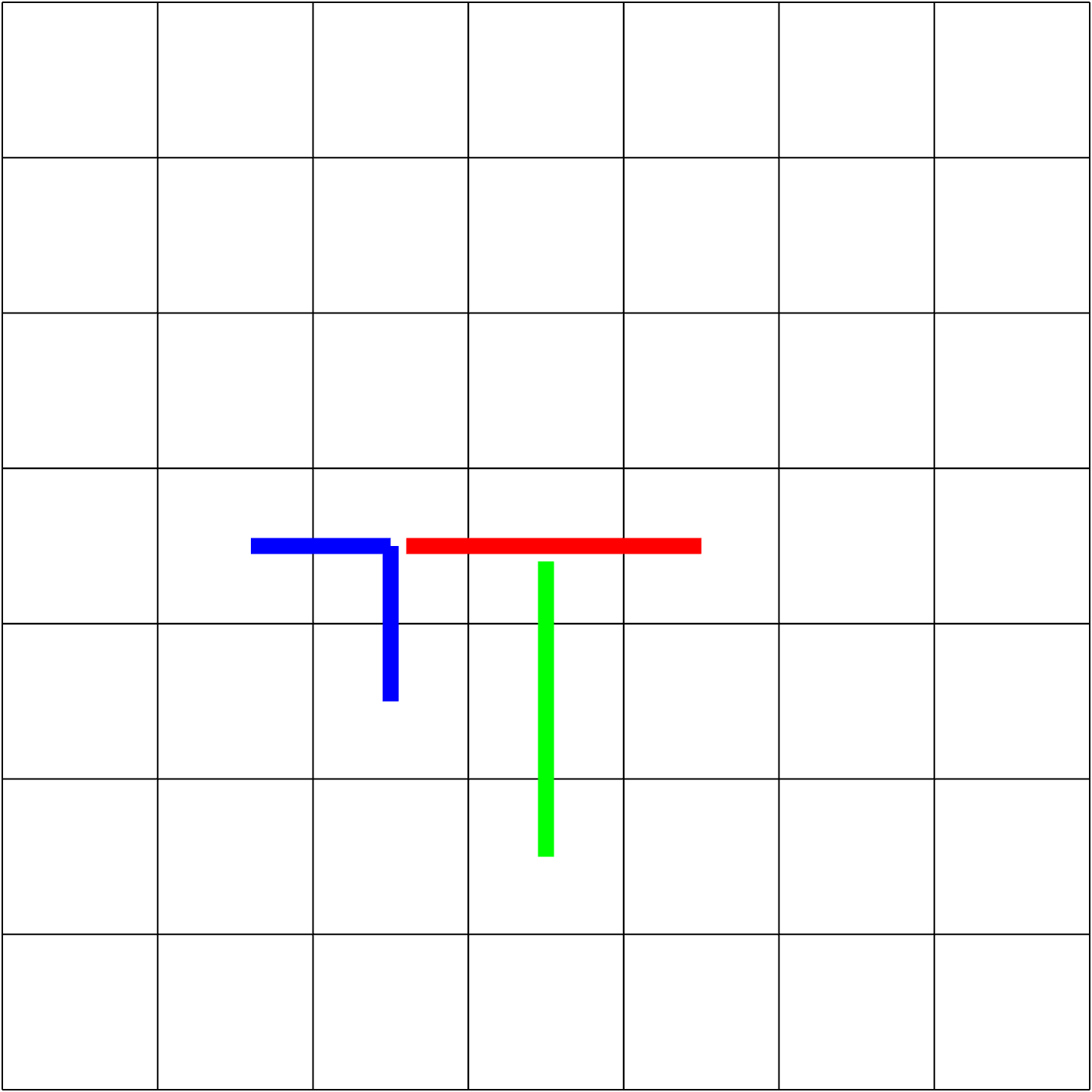

The goal of the Multi-Agent Path Finding (MAPF) problem is to find non-colliding paths for agents in an environment, such that each agent reaches its goal from its initial location. In safety-critical applications, a human supervisor may want to verify that the plan is indeed collision-free. To this end, a recent work introduces a notion of explainability for MAPF based on a visualization of the plan as a short sequence of images representing time segments, where in each time segment the trajectories of the agents are disjoint. Then, the problem of *Explainable MAPF via Segmentation* asks for a set of non-colliding paths that admit a short-enough explanation. Explainable MAPF adds a new difficulty to MAPF, in that it is NP-hard with respect to the size of the environment, and not just the number of agents. Thus, traditional MAPF algorithms are not equipped to directly handle Explainable MAPF. In this work, we adapt Conflict-Based Search (CBS), a well-studied algorithm for MAPF, to handle Explainable MAPF. We show how to add explainability constraints on top of the standard CBS tree and its underlying \(A^*\) search. We examine the usefulness of this approach and, in particular, the trade-off between planning time and explainability.
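To make the idea of a segmented explanation concrete, here is a minimal Python sketch of how a collision-free plan could be cut into time segments in which no agent enters a cell that another agent has used in the same segment. It is an illustration under stated assumptions, not the XG-CBS implementation: the function name `greedy_segmentation`, the representation of a path as a list of grid cells indexed by time step, and the convention that segments partition the time steps are all choices made for this example. Because any sub-interval of a disjoint segment is itself disjoint, extending each segment as far as possible yields the fewest segments under this definition.

```python
def greedy_segmentation(paths):
    """Split a plan into time segments with pairwise-disjoint trajectories.

    paths: one list of hashable cells per agent, indexed by time step.
    Assumes the plan has no same-time vertex collisions (hypothetical
    input convention for this sketch).
    Returns a list of (first_step, last_step) pairs, inclusive.
    """
    horizon = max(len(p) for p in paths)
    # Agents that finish early are assumed to wait at their final cell.
    padded = [list(p) + [p[-1]] * (horizon - len(p)) for p in paths]
    n = len(padded)

    segments = []
    start = 0
    visited = [set() for _ in range(n)]  # cells used by each agent in this segment

    for t in range(horizon):
        cells = [p[t] for p in padded]
        # A crossing occurs when agent i steps on a cell agent j already used.
        crossing = any(
            cells[i] in visited[j]
            for i in range(n)
            for j in range(n)
            if i != j
        )
        if crossing:
            # Close the current segment just before t and start a new one at t.
            segments.append((start, t - 1))
            start = t
            visited = [set() for _ in range(n)]
        for i in range(n):
            visited[i].add(cells[i])

    segments.append((start, horizon - 1))
    return segments


# Agent 0 moves right along y = 0; agent 1 waits, then crosses cell (1, 0)
# one step after agent 0 has left it.
example_paths = [
    [(0, 0), (1, 0), (2, 0), (3, 0)],
    [(1, 1), (1, 1), (1, 0), (1, -1)],
]
example_segments = greedy_segmentation(example_paths)
```

In this example the plan needs two segments, steps 0 to 1 and steps 2 to 3, because agent 1 reuses a cell that agent 0 occupied earlier. A plan would fit an explanation budget of `r` images when `len(greedy_segmentation(paths)) <= r`.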

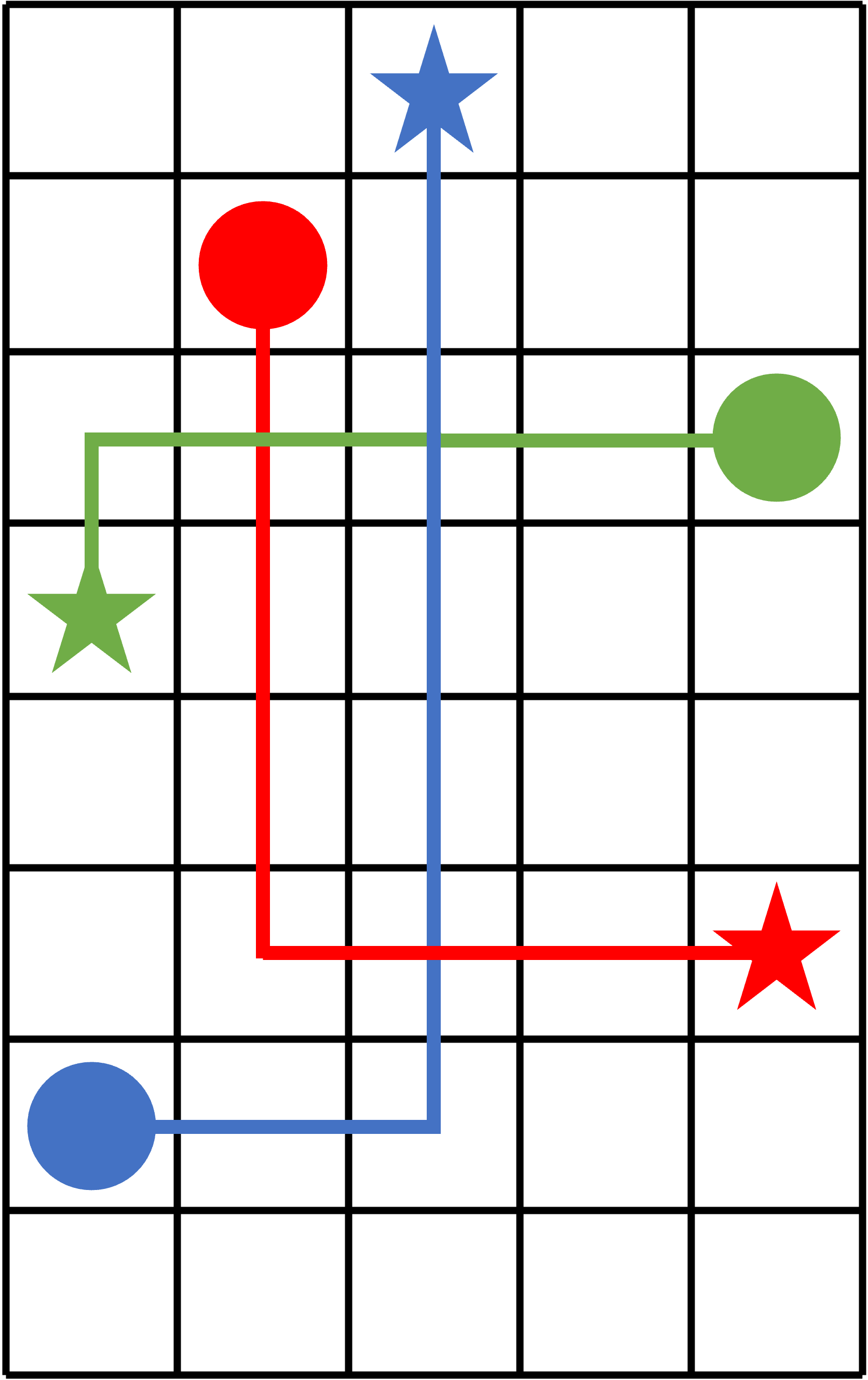

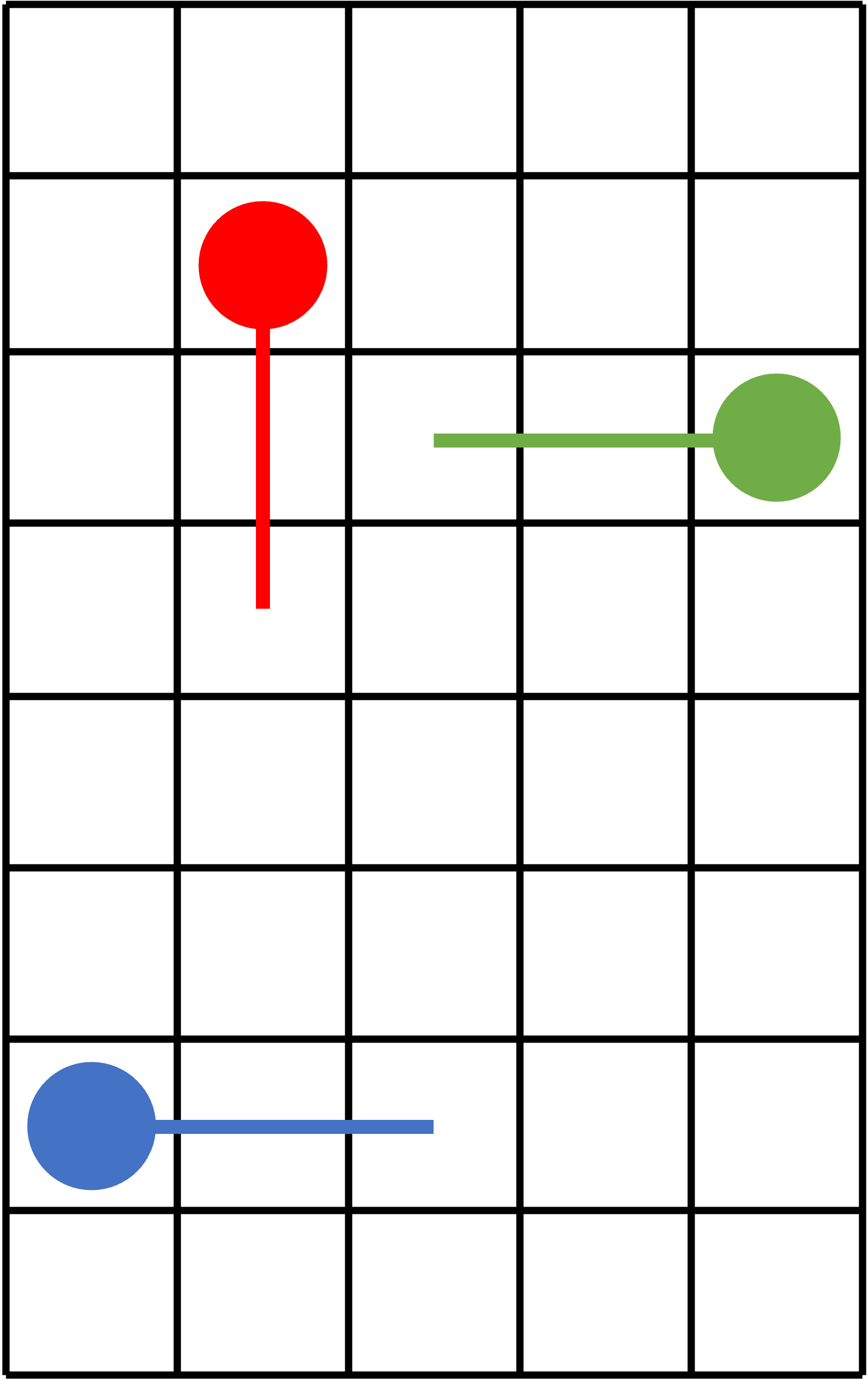

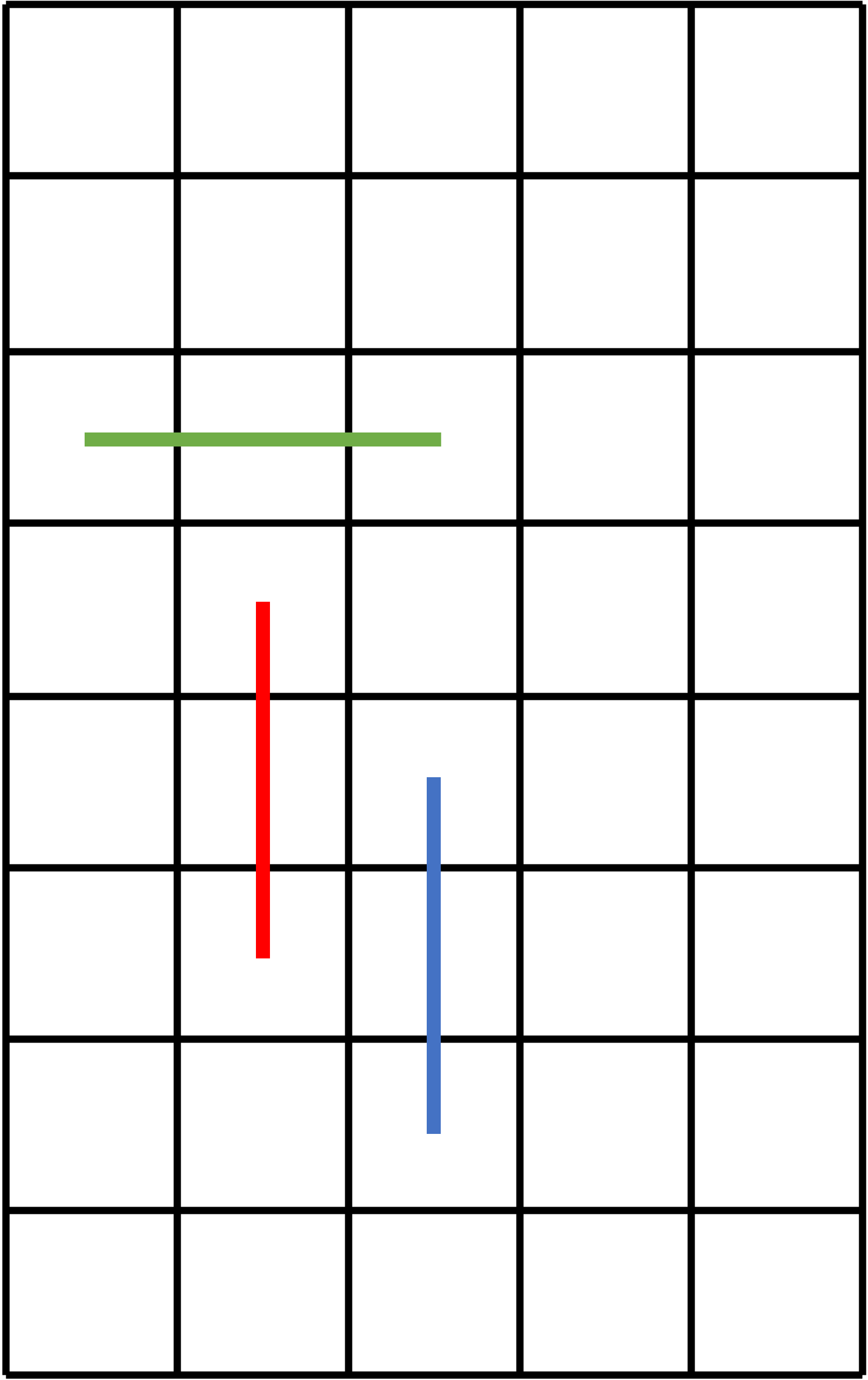

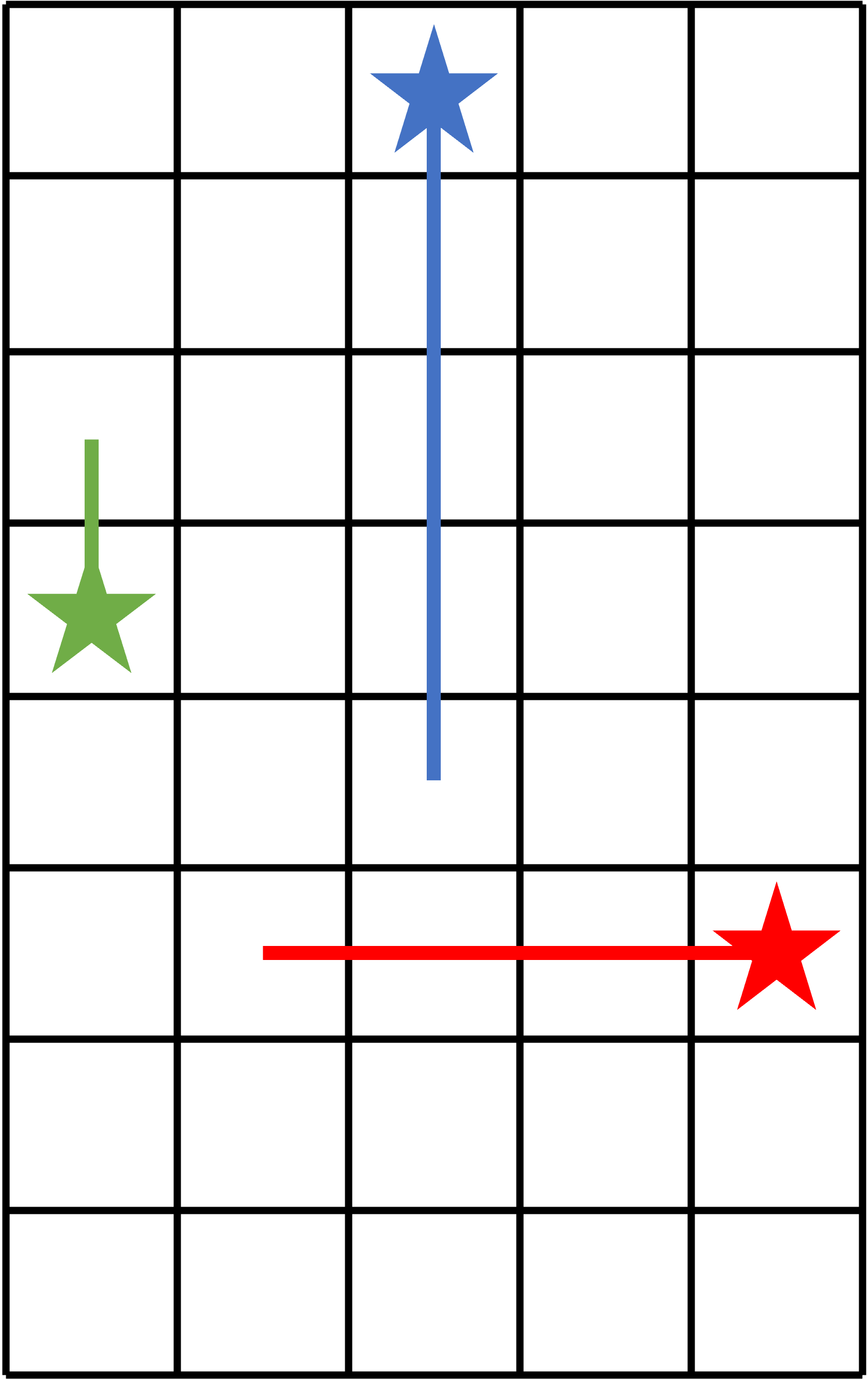

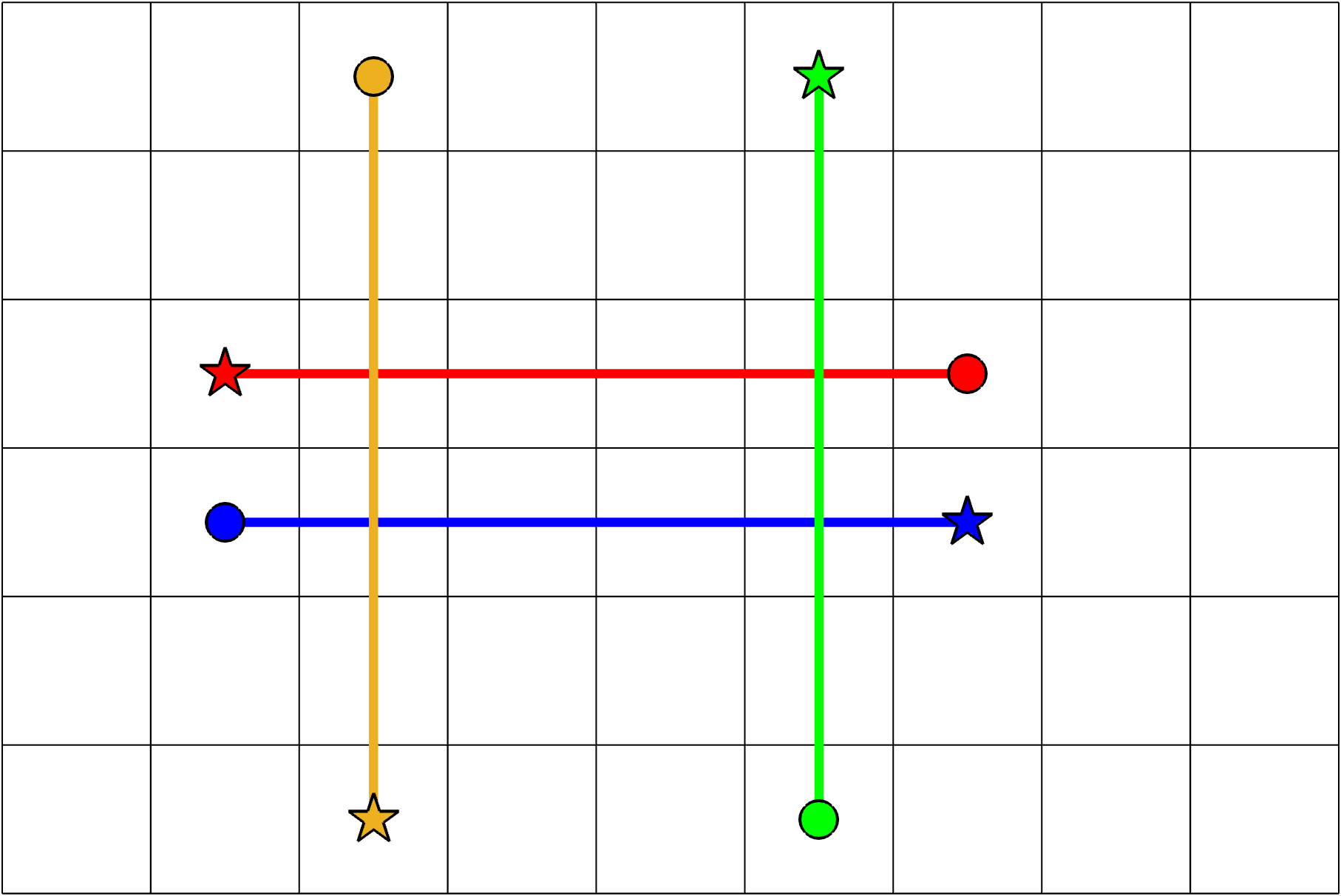

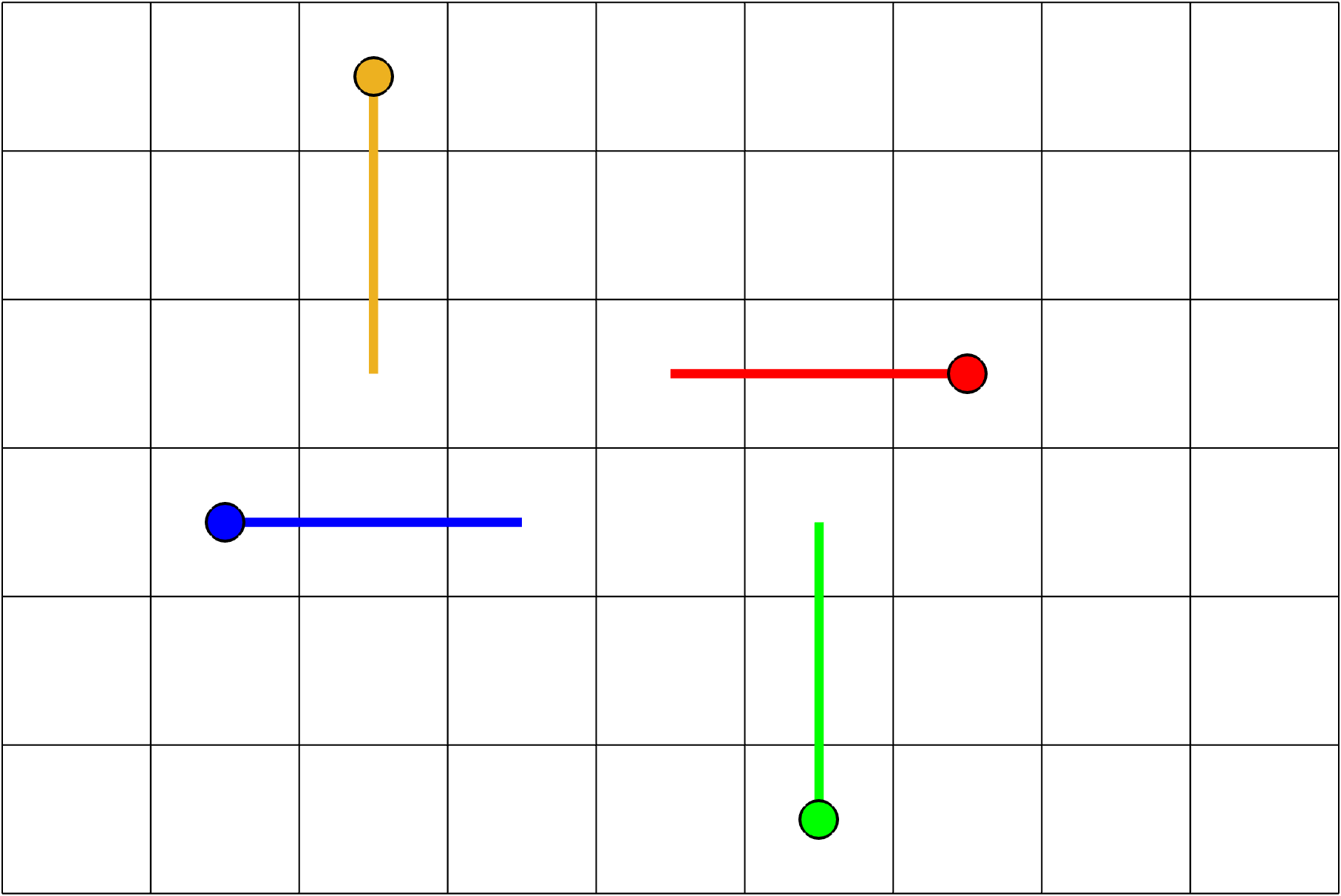

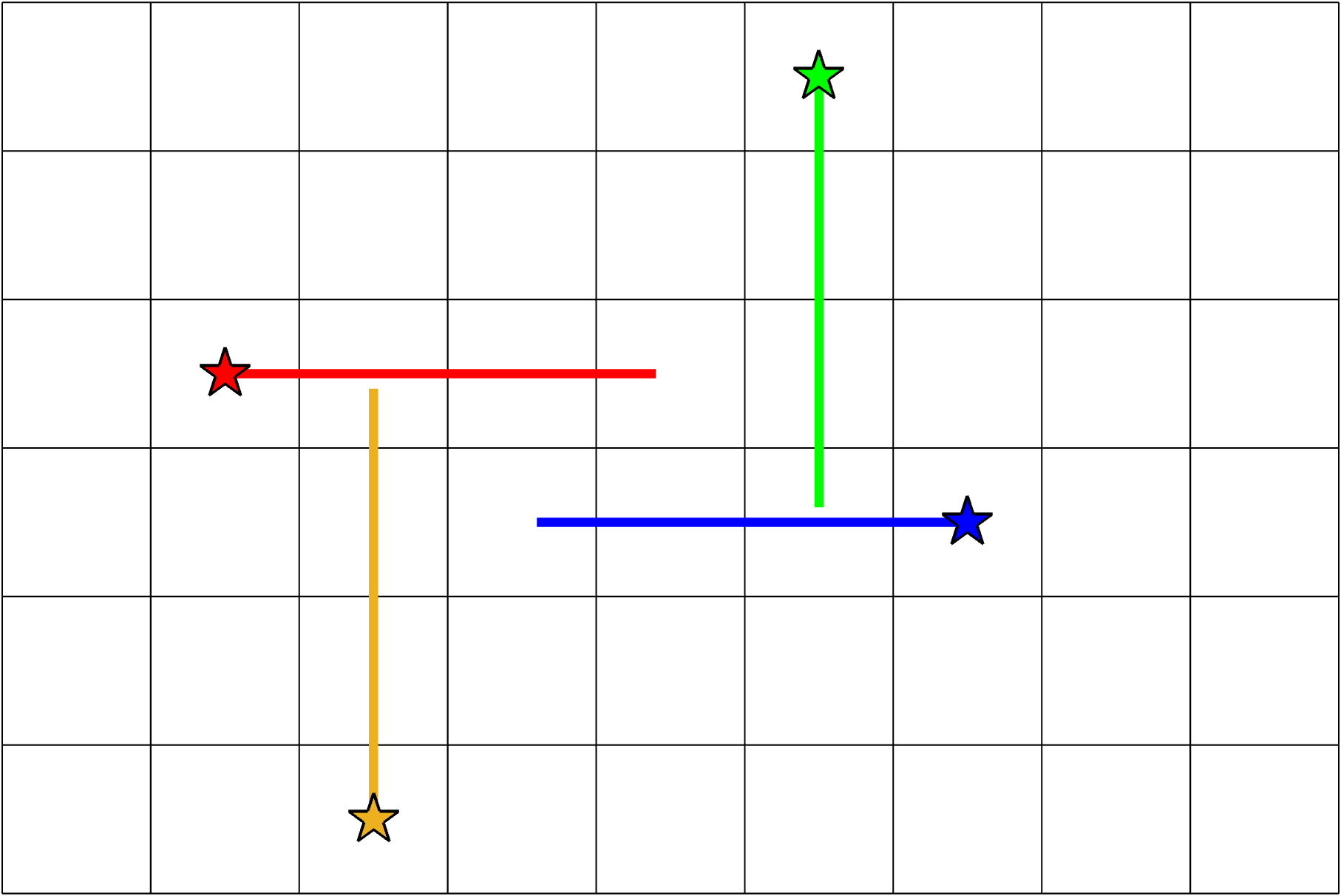

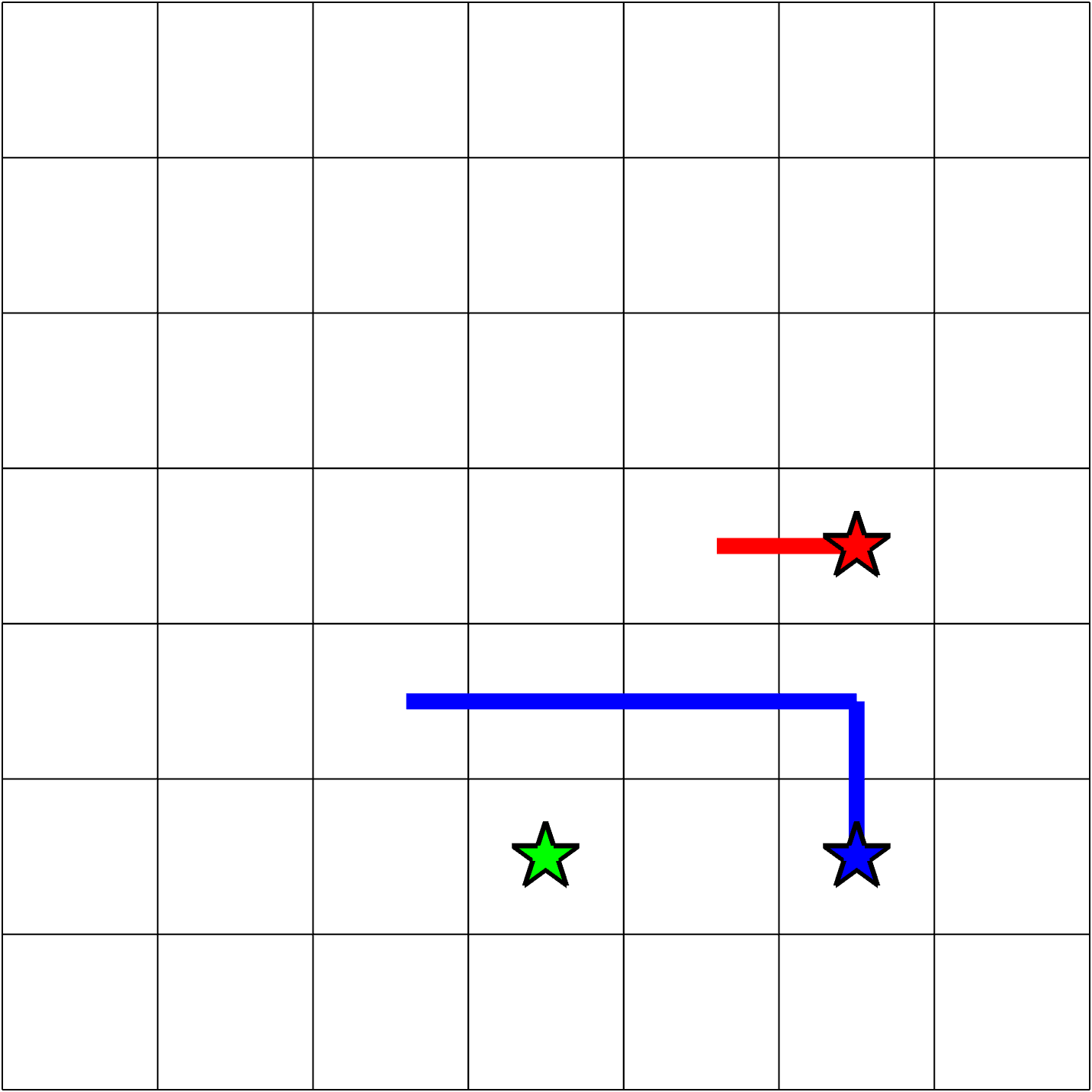

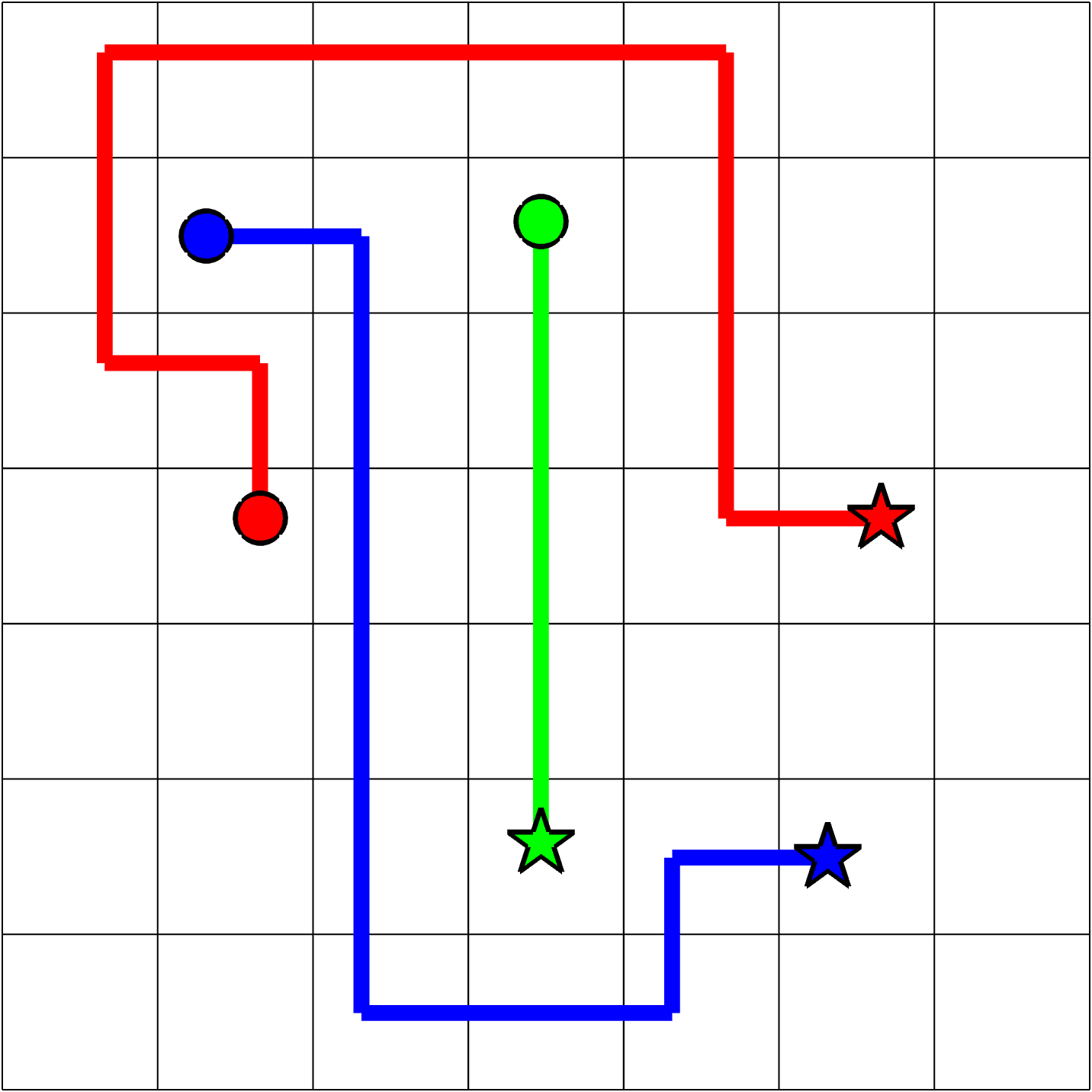

In this work, we consider a decoupled approach to the Explainable MAPF problem. Specifically, we adapt CBS, a two-level algorithm that, at its low level, plans individually for each agent and, at its high level, identifies collisions between the agents and places constraints to resolve them in the next low-level iteration. Our main contribution is to show how constraints similar to those used by CBS can capture segmentation conflicts, namely plans whose decomposition is too long for the end-user. We then discuss how to adapt CBS to compute and place these constraints during its search, thus obtaining our new algorithm, dubbed Explanation-Guided CBS (XG-CBS). XG-CBS is capable of returning MAPF plans that can be satisfactorily presented to the human end-user. Some example plans from XG-CBS are shown below:

I invite you to investigate any of these great resources to learn more about XG-CBS:

- The original XG-CBS ICAPS 2022 Conference Paper

- The most-current implementation of XG-CBS (to my knowledge)